The Business Impact

Customer Backlash: A Cautionary Tale from Spotify

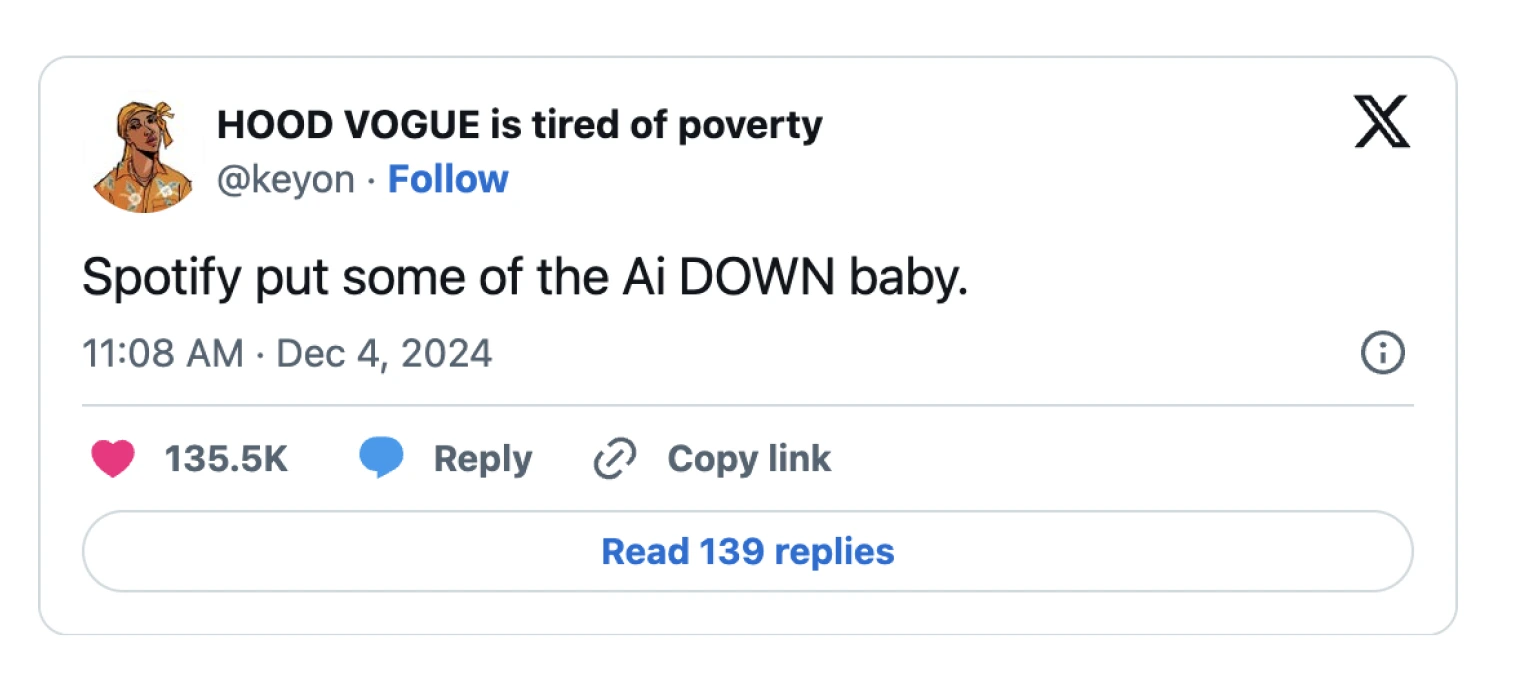

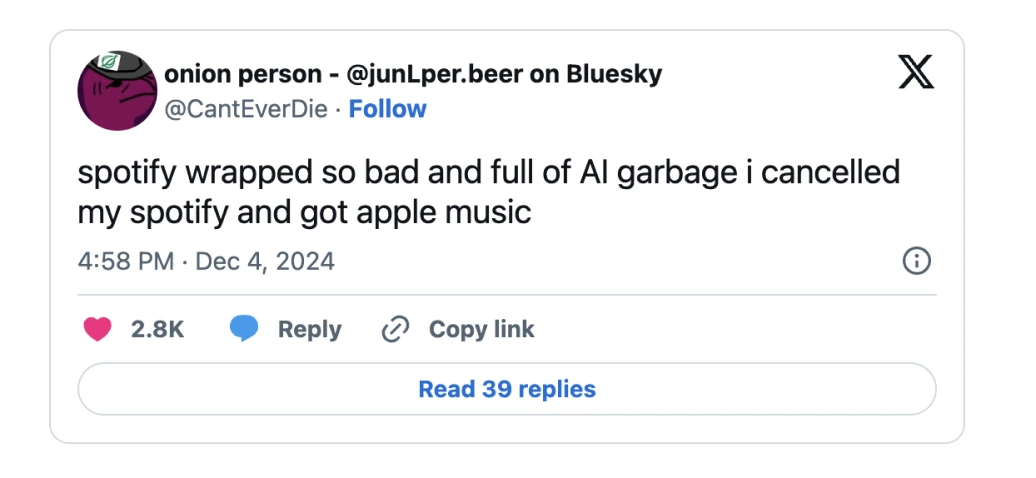

Each year, Spotify's 600 million users look forward to the company's "Wrapped" program, which uses machine learning to analyze subscribers' listening data and give them insights into their music habits. Last year, however, listeners blasted Spotify for leaning too heavily on AI. The company turned subscriber data into AI-driven "podcasts" in which artificially generated voices attempted a conversation, and the experience put off some listeners enough that they left Spotify altogether.

To get some perspective, we spoke with Candice Greenberg, former Global Brand Lead at Spotify, whose team led the development of Spotify Wrapped. She stressed the importance of combining AI with human curation by the company's music specialists. "Even though we started to have integrations with AI, we still always had an individual person sitting at the end of the computer thinking, hmm, I like this song, I like that song."

The takeaway: AI can be a powerful tool for analyzing and visualizing data, but using it to simulate human dialogue without clear purpose or finesse risks alienating users. Companies must tread carefully, ensuring that AI applications enhance rather than detract from the customer experience.

Erosion of Trust

Building customer trust with generative AI is particularly challenging. Generative AI tools are widely known to produce convincing yet false information, which can lead to public confusion. Forbes Advisor reports that 76% of consumers are concerned about misinformation from AI tools. Among consumers familiar with or using generative AI, 70% say the emergence of GenAI content makes it harder to trust what they see online.

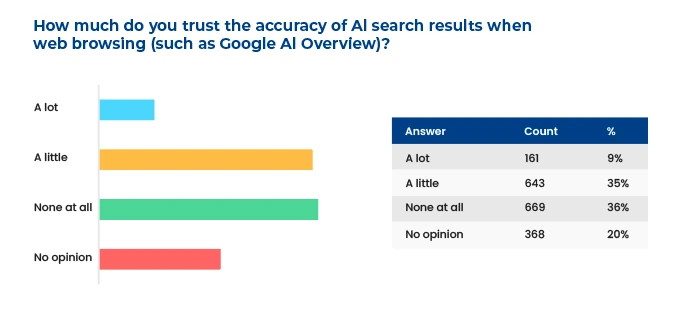

Google has increasingly been placing AI-generated content in its search results, yet 61% of consumers do not have much trust in it. According to our conversation with CivicScience, its research found that 35% of consumers have "a little trust" in AI-generated search results and 36% have none at all.

Unintended Consequences

Google Assistant is an iconic AI tool used worldwide, but its path to becoming a trusted, widely adopted product was not always smooth. To gain perspective, we spoke with Nino Tasca, whose team led the development of Google Assistant. He spent a decade at Google, where he led product management for the speech recognition used in Android and other devices.

“AI models are as good as the training data,” says Tasca. “With Google Assistant, the original training data did not have enough representation of African American voices. We'd have to step in and create specific programs and projects to boost recognition for underrepresented groups, so the models would work better for everyone, not just a subset.”

However, some attempts at Google to correct for historical inequities ended up backfiring. According to Tasca, “People who were trying to do the right thing got sort of lost because they were trying to put guardrails on the model.”

“If you're asking for a historical image of the Kings of England, it turns out that those were rich white people, which should be reflected in the images shown in Google results for accuracy. And so making a model otherwise, unless asked for, is a bad thing. As soon as you try to manipulate the data in an unnatural way, the product can become worse, and then you lose trust in the product.”

The takeaway? Ensuring fairness and inclusivity in AI requires careful calibration. Overcorrecting or distorting data risks damaging the credibility of AI systems, highlighting the importance of striking a balance between equitable representation and maintaining authenticity.

How Corporations Are Reacting

"AI's growing influence in our daily lives brings attention to the need for its responsible and safe use," according to Dev Stahlkopf, Chief Legal Officer and Executive Vice President at Cisco. She also pointed to Cisco's research in this area. "78% of surveyed consumers feel that it is the responsibility of businesses to employ AI ethically, which underscores the vital relationship between responsible AI and consumer trust."

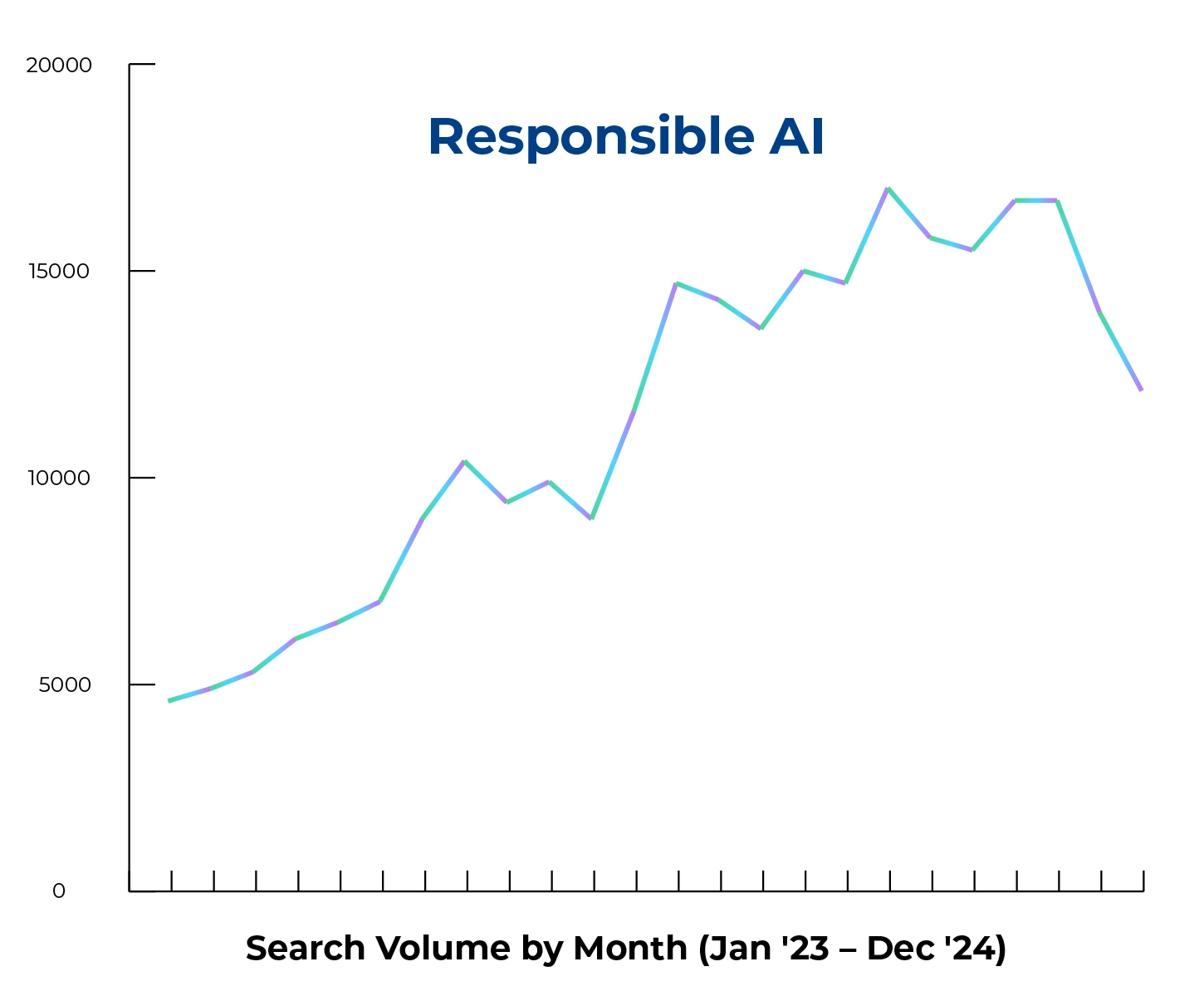

Google searches for "responsible AI" have increased 5X in the past two years. Responsible AI refers to designing, developing, and deploying AI systems in an ethical and accountable manner.

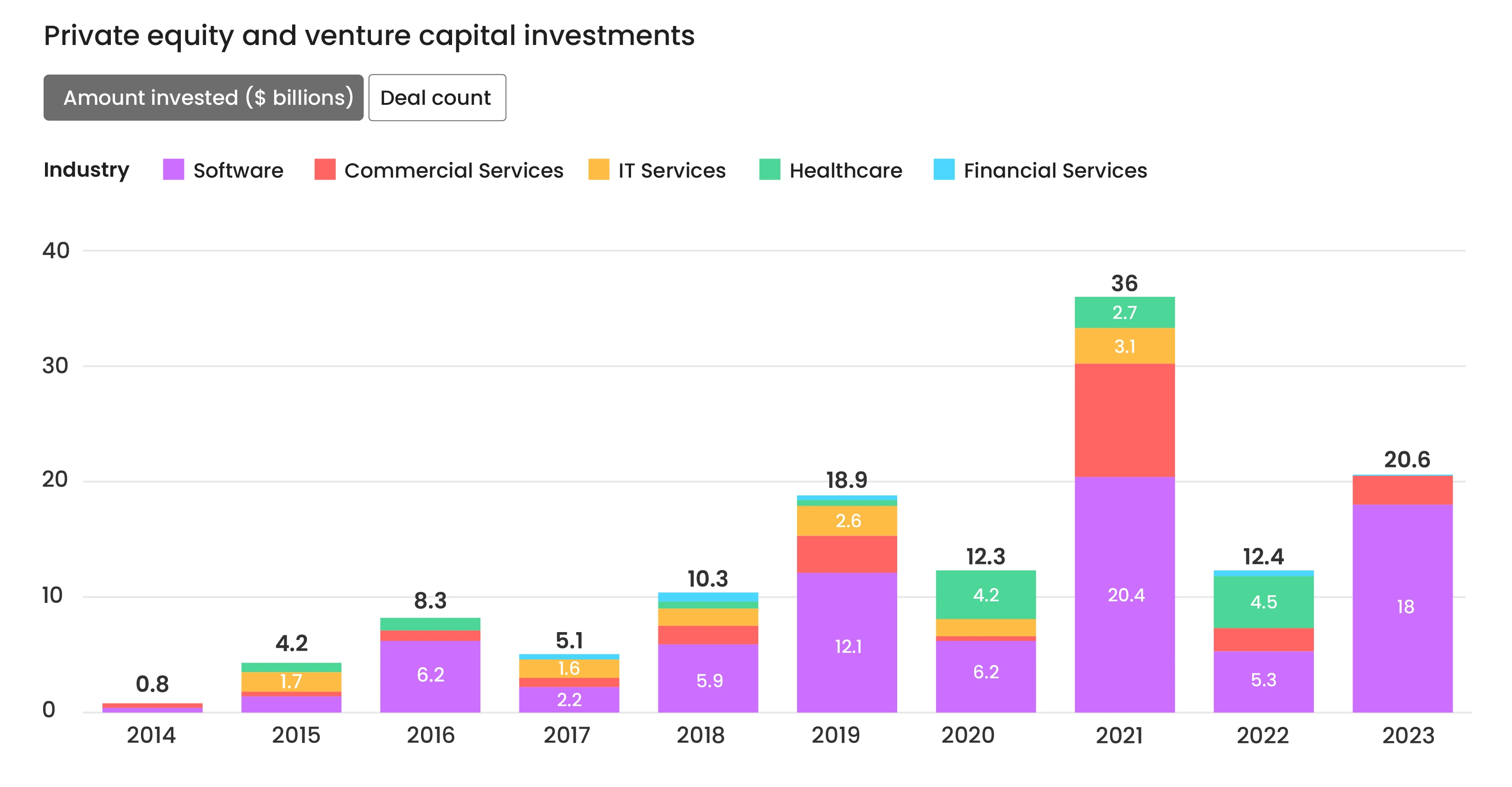

In recent years, companies have invested heavily in customer experience technologies to meet this need, with AI playing a significant role.

Investment in companies that enhance the customer experience has surged to $100 billion over the last five years.

Human-Centered Design, UX, and Empathetic AI

Empathy in AI has become a top priority in customer service. Interactions, a leading provider of conversational AI for customer service, is tackling this issue with its Intelligent Virtual Assistant, which combines AI with human review. Fortune 500 companies such as Citi use its platform to enable "emotionally aware conversations" by flagging sensitive customer issues for human agents to resolve. The platform uses sentiment analysis to detect frustration or confusion in a customer's tone. For example, if a user expresses dissatisfaction, the system adjusts its language to acknowledge the issue and offers to transfer the case to a human agent. This dynamic response model has improved first-call resolution rates by 30%.
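The escalation flow described above can be sketched in a few lines of Python. This is not Interactions' method: the keyword lists, the scoring rule, the threshold, and the names `score_sentiment` and `respond` are all hypothetical, and a keyword count is a deliberately crude stand-in for a trained sentiment model. What the sketch shows is the control flow the paragraph describes: score the customer's message, acknowledge frustration when the score falls to a threshold, and offer a human handoff.

```python
from dataclasses import dataclass

# Hypothetical cue words standing in for a trained sentiment model.
NEGATIVE_CUES = {"frustrated", "angry", "confused", "unacceptable", "still", "again", "wrong"}
POSITIVE_CUES = {"thanks", "great", "perfect", "helpful"}

# Hypothetical threshold: scores at or below this trigger an offer to escalate.
ESCALATION_THRESHOLD = -2


@dataclass
class Reply:
    text: str
    escalate: bool  # True when the conversation should be offered to a human agent.


def score_sentiment(message: str) -> int:
    """Crude score: +1 per positive cue word, -1 per negative cue word."""
    words = [w.strip(".,!?").lower() for w in message.split()]
    positives = sum(w in POSITIVE_CUES for w in words)
    negatives = sum(w in NEGATIVE_CUES for w in words)
    return positives - negatives


def respond(message: str) -> Reply:
    """Acknowledge frustration and offer a human agent when sentiment is negative enough."""
    if score_sentiment(message) <= ESCALATION_THRESHOLD:
        return Reply(
            text="I'm sorry this has been frustrating. Would you like me to "
                 "connect you with a member of our team?",
            escalate=True,
        )
    return Reply(text="Happy to help with that.", escalate=False)


if __name__ == "__main__":
    print(respond("My card was charged again and I'm frustrated."))
    print(respond("Thanks, that was helpful!"))
```

In this sketch the first message scores -2 and is offered a handoff, while the second scores +2 and stays with the assistant. A production system would use a trained classifier and the conversation history, but the score, compare, acknowledge, and hand off sequence is the part the paragraph describes.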

Smaller companies are also finding ways to balance AI and human interactions to enhance their customer experiences. Consider Groomsday, an e-commerce business specializing in wedding gifts. Chris Bajda, Managing Partner of Groomsday, says, "A big concern is that AI can sometimes feel impersonal. We noticed a 10% dip in customer satisfaction scores when we first introduced chatbots without proper human oversight. Now, chatbots handle simple questions, freeing our staff to focus on complex issues. This keeps us efficient without losing the personal connection our customers value. Since implementing this balance, we've seen a 15% improvement in overall customer satisfaction."

Providing Transparency

Businesses are increasingly incorporating strategies to openly communicate how AI operates, ensuring customers understand its role and limitations. The following examples illustrate different approaches to enhancing transparency.

Salesforce's Einstein GPT provides a framework in which users can see how automated customer service tasks are executed. It also provides detailed logs of AI decision-making in marketing campaigns, helping businesses track what influences customer engagement.

Airbnb's omnichannel support system integrates AI-driven chatbots with human agents to provide 24/7 service across platforms like social media, in-app messaging, and email. The system's transparency lies in its escalation process: complex queries are flagged for human review, ensuring accurate and empathetic responses. This approach has improved customer satisfaction scores by 20% year-over-year. "I believe that without great design and community-based intelligence, AI can only achieve a fraction of its potential. But with them, the sky's the limit," said Adam Cheyer, VP, AI Experience at Airbnb.

Tesla's Autopilot system includes real-time data sharing on performance and safety incidents. By openly publishing this information and engaging users through educational webinars, Tesla has reduced skepticism and encouraged informed usage. McKinsey reports that this transparency strategy has increased consumer trust by 25%, a significant achievement in the autonomous vehicle sector.

Creating New Retail Experiences

In retail, bad customer experiences and high product returns hurt revenue. IKEA is attempting to mitigate those risks with AI-powered augmented reality (AR). Its AR tool helps manage customer expectations and guide purchasing decisions by letting customers visualize how furniture would fit into their homes before they buy. Parag Kulkarni, Chief Digital Officer at IKEA, who leads the company's digital transformation and AI initiatives, says, "Traditionally, a customer might visit our website, browse a catalog, decide on a product, and then visit a store to finalize the purchase. With AI, we've reimagined this journey. For example, using visual computation technology, customers can now scan their rooms with their smartphones and visualize how IKEA furniture would look in their space."

Parla Retail is "humanizing" e-commerce experiences for retailers. Its platform lets retailers connect website visitors with representatives through live 1:1 video conversations, which the company claims increases average order value by 2X to 3X. "We still live in a world where social engagement is the norm," says Jamila Jamani, CEO of Parla Retail. "As much as we know that AI is part of our everyday toolkit, people still crave community. They are craving social interaction and that's just how we engage as people. I come from a family with three generations in the retail space. For us at Parla, and in the retail industry as a whole (with AI) there is a new prioritization on humans."

In a more futuristic vein, Proto Hologram is pioneering human-like holograms that "beam" celebrities into stores and venues in real time, giving consumers surprisingly entertaining experiences. Last year, for example, comedian Howie Mandel made a live cameo at New York's JFK Airport via a life-sized hologram machine. So far, major brands, including H&M, T-Mobile, and DHL, have signed on.

Adapting Strategies Globally

Brands are using AI to tailor their approaches to specific markets. Coca-Cola's Christmas marketing campaign used AI to create culturally relevant content for individual countries. For example, in Japan, the campaign featured custom animations aligned with local holiday traditions, increasing engagement by 35%.

Addressing Generational Differences

Individuals over age 40 are often more skeptical about digital security and more concerned about companies selling their personal data. Yet younger groups are not without concerns.

A 2024 survey by Time found that 80% of U.S. teens believe lawmakers should prioritize addressing AI risks, and most teens (62%) are worried about being tracked through their devices. Nevertheless, 86% of teens reported that their digital experiences have a positive impact on their lives, even as those experiences become increasingly AI-powered.

Coca-Cola's AI-enhanced holiday campaigns appeal to these demographic groups by offering personalized and interactive experiences. For instance, their AI-generated Christmas cards allowed users to customize polar bear designs while ensuring no personal data was stored, effectively balancing innovation with privacy.

Doritos' AI-augmented "crunch-cancellation" campaign used noise-canceling algorithms to humorously tackle a familiar snacking problem: the sound of crunching chips. This playful, relatable approach resonated with younger audiences, increasing brand engagement by 18% within weeks of the campaign's launch.

Cadbury's AI-driven poster generator let users create unique retro designs celebrating the brand's 200th anniversary. By clearly explaining how AI was used and emphasizing user control, Cadbury drew strong participation, with users generating over 75,000 posters.

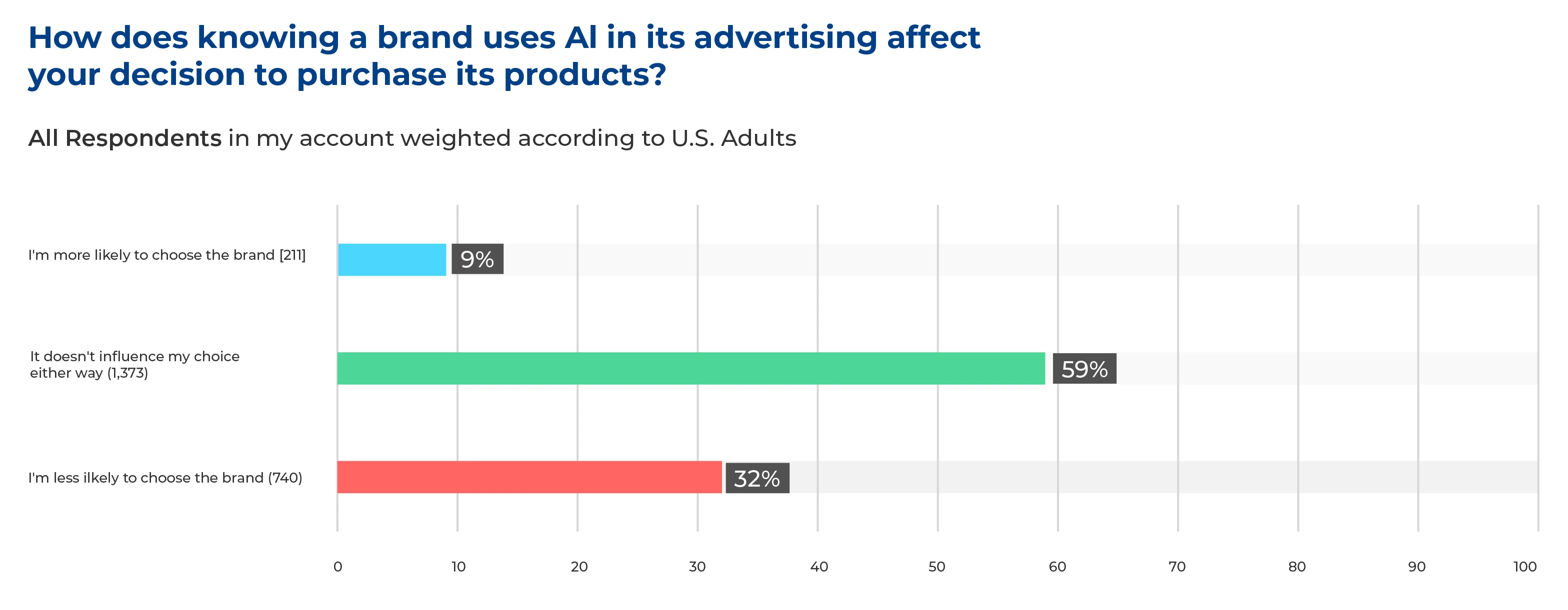

Despite these creative approaches, many consumers remain skeptical about the use of AI in advertising. According to CivicScience, 32% are less likely to choose a brand that uses AI in its advertising. An open question is how overtly brands will use AI in future ads.

The Rise of “Practical Adoption” - AI-Powered Consumer Products

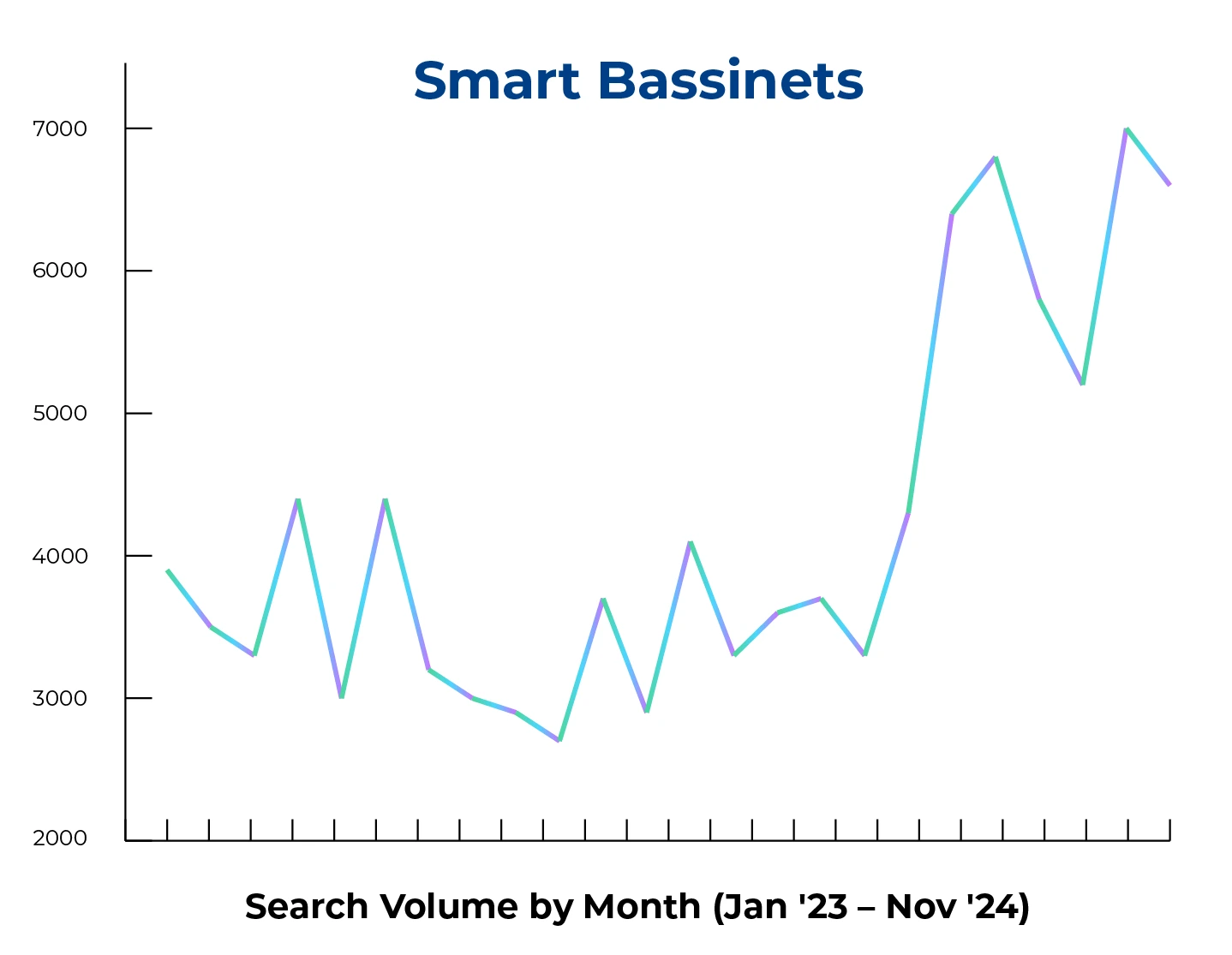

In some areas, consumers are voting with their wallets, and purchases of AI-powered products are increasing. At IDR, we call this "Practical Adoption" because these products use AI to address fundamental human needs such as security and wellness. Below are some striking examples of products whose Google search volume has risen sharply worldwide over the past two years.

Smart Bassinets

Public interest in "smart bassinets" has doubled in the past two years. A smart bassinet is a baby bed that uses integrated technology to soothe and monitor infants. Smart bassinets often include features such as built-in baby monitors, white noise machines, and automated rocking mechanisms to enhance the baby's comfort and safety.

Emma Healthcare offers "BebeLucy", an AI smart baby crib that monitors biological signals such as the baby's body temperature, heartbeat, and respiratory rate. It also uses machine learning to detect crying and monitor the surrounding environment.

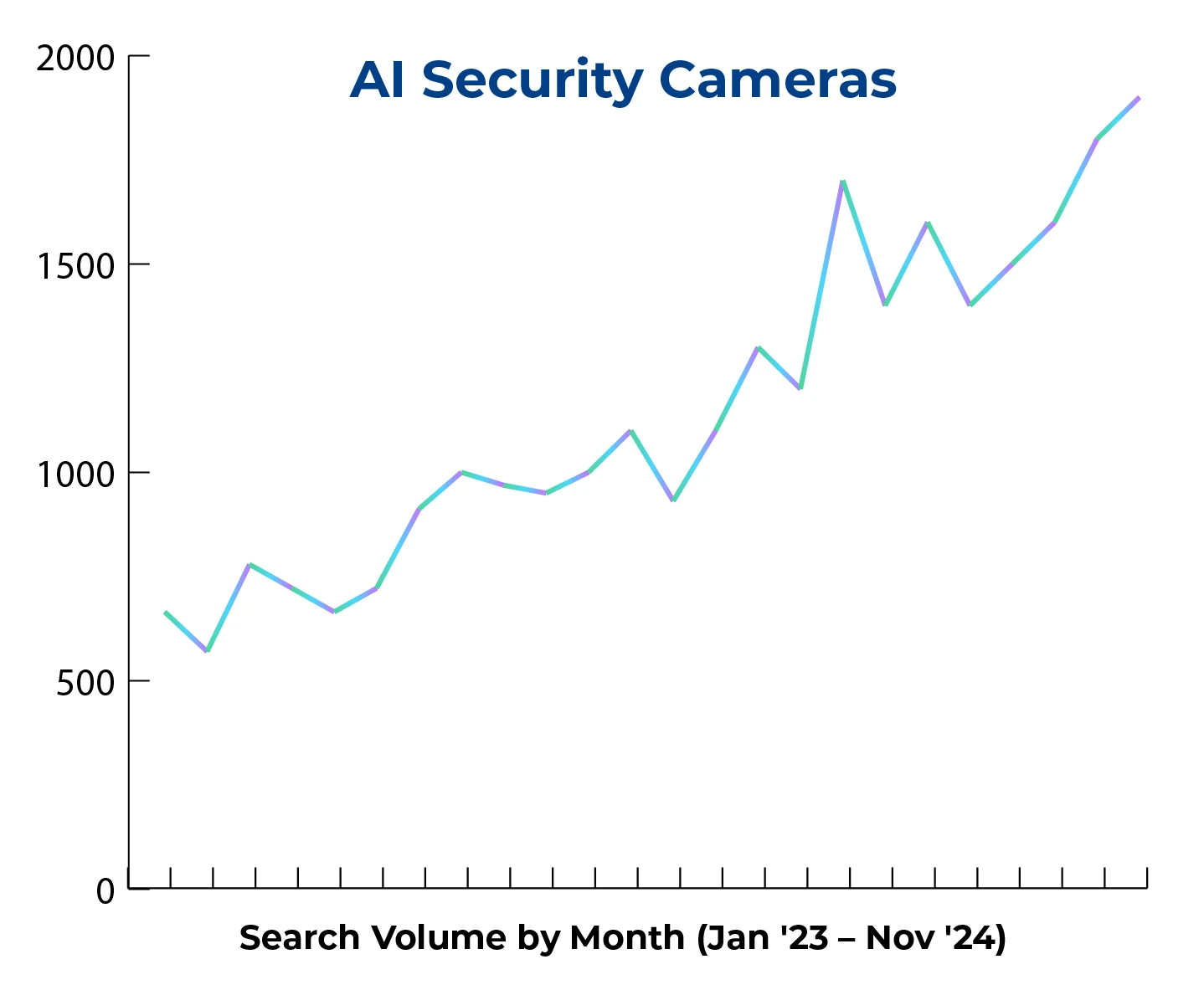

AI Security Cameras

Home and personal security cameras powered by AI are also on the rise. They typically include capabilities such as facial recognition, motion detection, and real-time alerts, allowing for more accurate threat detection and automated responses than traditional cameras.

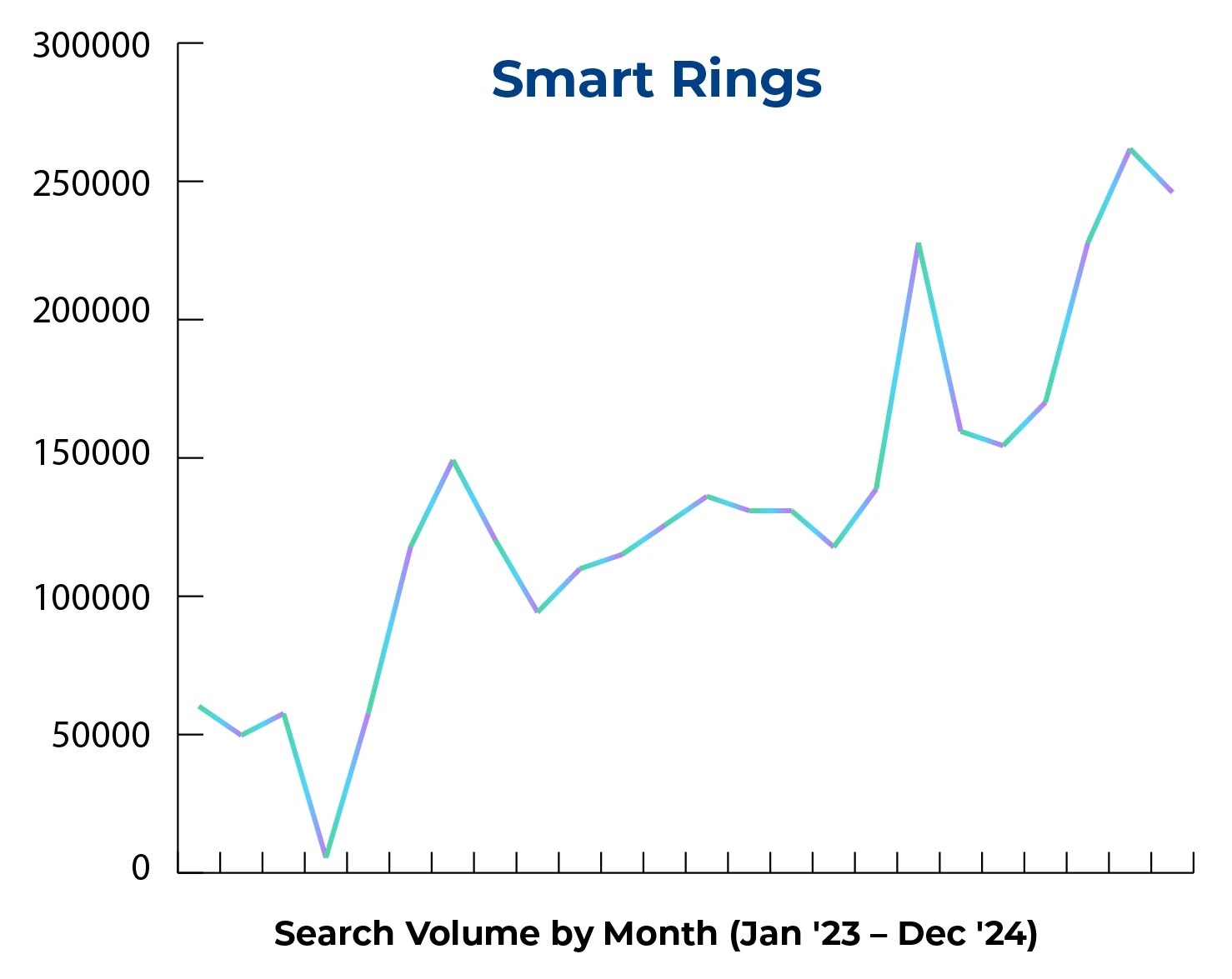

Smart Rings

AI-powered rings that monitor health and fitness metrics such as heart rate, sleep patterns, and activity levels are also on the rise. Google searches for "smart rings" have nearly doubled in the past year.

Some models, such as Oura's, also track the body's stress levels throughout the day and display them on a timeline. This lets Oura customers understand their physiological responses to various forms of stress, like a high-intensity workout, a night out with friends, or a bad day.

Last year, Oura launched Oura Advisor, an AI assistant designed to complement the Oura Ring experience by providing wellness tips, answering questions, and analyzing data. For instance, when you wake up in the morning, you get a readiness score with recommendations, such as advice to take it easy or a note that you're ready for a jam-packed day.

Corporate Governance and Decision-Making

Controls and Policies

To guide the ethical, trustworthy use of AI, companies are establishing governance frameworks and policies. John Thomas, Vice President and Distinguished Engineer at IBM Technology Expert Labs, said, "IBM has put in place a centralized and multi-dimensional AI governance framework centered around the IBM internal AI ethics board. It supports both technical and non-technical initiatives to operationalize the IBM principles of trust and transparency."

Keep Efforts Focused and Compartmentalized

Given consumer concerns about the use of their audio recordings, Google developed a separate, small model for "Hot Word Detection", which listens for a hot word (such as "Hey Google") and only then triggers Android devices to start recording the user's voice for Google Assistant. This model was kept separate from the other models and training data that power Google recommendations. According to Tasca, "We have a very, very small model that only listens for those hot words and nothing else. And so it doesn't record. It's just on the device the whole time. Nothing is being sent to the cloud."
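To make the separation Tasca describes concrete, here is a minimal Python sketch of a wake-word gate. It is not Google's implementation. Audio frames are simplified to short text strings, `detect_hotword` is a plain string match standing in for a small on-device acoustic model, and the names `gate_audio` and `query_frames` are hypothetical. The sketch illustrates the control flow: every frame is examined locally, frames heard before the hot word are discarded, and only the frames that follow a detection are collected for sending off the device.

```python
from typing import Iterable, List

# The phrase a real on-device model would recognize acoustically (assumed here).
HOTWORD = "hey google"


def detect_hotword(frame: str) -> bool:
    """Hypothetical stand-in for a tiny on-device model: a plain text match."""
    return HOTWORD in frame.lower()


def gate_audio(frames: Iterable[str], query_frames: int = 2) -> List[str]:
    """Return only the frames that would leave the device.

    Frames heard before the hot word are checked on-device and then dropped.
    After a detection, the next `query_frames` frames are forwarded, and the
    gate closes again.
    """
    sent_to_cloud: List[str] = []
    remaining = 0  # How many more frames the open gate will forward.
    for frame in frames:
        if remaining > 0:
            sent_to_cloud.append(frame)
            remaining -= 1
        elif detect_hotword(frame):
            remaining = query_frames  # Open the gate for the user's question.
        # Otherwise the frame is discarded locally and never stored.
    return sent_to_cloud


if __name__ == "__main__":
    conversation = [
        "dinner table chatter",
        "more chatter",
        "Hey Google",
        "what's the weather",
        "tomorrow",
        "back to chatter",
    ]
    print(gate_audio(conversation))
```

With the sample conversation, only the two frames after "Hey Google" are returned, and the surrounding chatter never leaves the function. A real assistant must also detect when the user has finished speaking, which the fixed `query_frames` count only approximates.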

Speaking more broadly, James Manyika, Senior Vice President for Research, Technology, and Society at Google, adds: "We are convinced that the AI-enabled innovations we are focused on developing and delivering boldly and responsibly are useful, compelling, and have the potential to assist and improve lives of people everywhere — this is what compels us."

Predictions for 2025 and Beyond

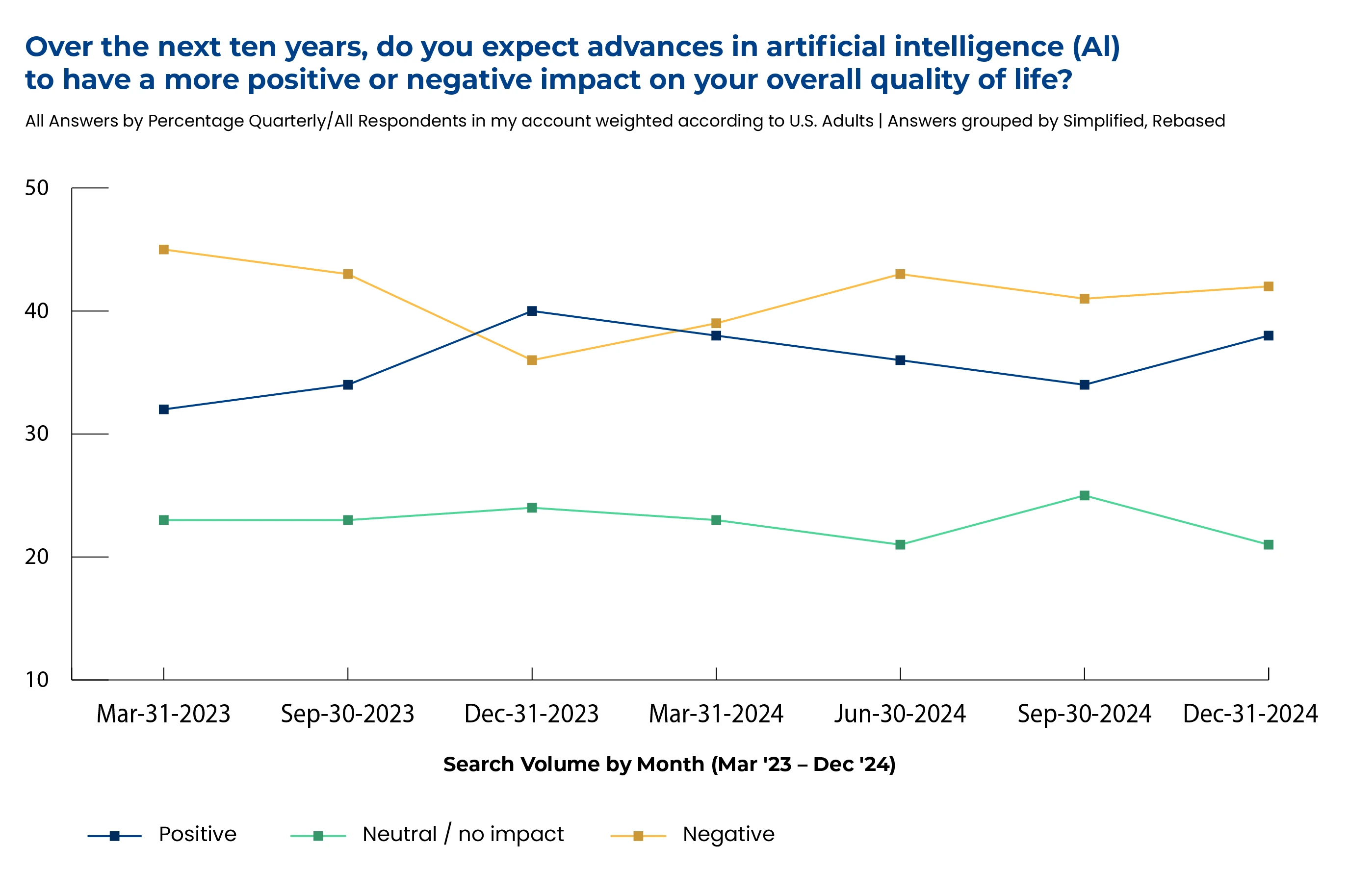

Despite corporate attempts to infuse AI into their operations and customer experiences, consumers are increasingly skeptical about the future, according to a CivicScience tracking study of 57,000 people. In December 2024, 42% of consumers felt that AI would have a more negative impact on their quality of life over the next ten years, up from 36% in December 2023.

These predictions are based on current trends and assumptions from the interviews and research we conducted, and outcomes may vary due to unforeseen circumstances or changes in the industry landscape.

More Training of AI with Synthetic Data

John Thomas at IBM stresses the necessity of trustworthy data in AI systems. "Trustworthy AI has to start with trustworthy data. Only by automating the discovery, use, and maintenance of their data can organizations ever hope to truly unlock their potential."

Yet Tasca says, "I don't think the core models are going to get that much better. I feel like we're still based on the transformer architecture. We've hit the limits with the amount of data that's needed. I think there needs to be a eureka moment of another transformative change in model architecture to reach the next level." He suggests that this eureka moment may come from breakthroughs in synthetic data, which is "manufactured" algorithmically rather than collected from the real world, and which AI developers are already using to produce better models. In other words, AI is creating the data that fuels it, which raises a host of issues that technology innovators and companies will need to navigate carefully.

Risk Management as a Corporate Priority

Rigorous assessment and validation of AI risk management practices and controls will become non-negotiable. Even if the specifics of AI assessment and validation are not mandated, stakeholders will demand them, just as they demand confidence in other decision-critical information (such as financial results) and in cybersecurity and privacy practices. According to Jennifer Kosar, PwC's AI Assurance Leader, "successful AI governance will increasingly be defined not just by risk mitigation but by achievement of strategic objectives and strong ROI."

AI Gets Emotional

Companies like Hume AI are powering experiences with empathic AI, such as voice assistants that can detect over 50 human emotions, including adoration, confusion, fear, and relief.

As AI becomes more integrated into daily life, technologies such as natural language processing and emotion recognition are expected to drive broader acceptance of AI.

Sustainability of AI Becomes Front and Center

"AI is incredible, but it's also massively ecologically damaging," says Candice Greenberg, the former Global Brand Lead at Spotify. "The amount of power that it uses is energy intensive and massively ecologically damaging. Gen A is coming fast and furious and they don't play by the same rules as any of the other generations. They expect the companies that they support and work for to actually do good and to literally put their money where their mouth is. They're not going to buy brands that say they're doing something but aren't, and so I feel like with the rise of Gen A and the fact that they have more purchasing power and the fact that there's soon to be the new workforce of things, companies are going to have to wake up and change their operating model."

The Bottom Line:

Human-Centric Experiences with AI Benefits

Consumer skepticism toward AI stems from valid concerns about privacy, transparency, and empathy. However, innovations in human-centered design, transparent implementation, and empathetic AI systems demonstrate the potential to bridge these gaps. By addressing the generational and cultural nuances of AI perception, companies can tailor their approaches to foster trust and engagement.

The future of AI lies in its ability to seamlessly integrate into daily life while addressing ethical considerations and user concerns. By prioritizing education, clear communication, and user control, businesses can transform skepticism into confidence, ultimately unlocking the full potential of AI-driven experiences.

Considerations for Expert Interviews

1. Privacy Concerns and Consumer Confidence

Evaluate how privacy concerns influence commercialization and market potential.

What strategies have you employed to turn strong privacy practices into a competitive advantage?

How do consumer concerns about data misuse affect adoption rates for your AI products?

What investments are required to ensure data privacy without compromising scalability or profitability?

2. Transparency and Market Differentiation

Explore how transparency can drive market adoption and distinguish products.

How does transparency in AI operations influence buyer decisions in your target markets?

What steps do you take to differentiate your products by emphasizing explainability and trustworthiness?

What marketing strategies do you use to communicate your AI's transparency and reliability to customers?

3. Ethical AI Implementation and Brand Value

Assess how ethical AI practices contribute to market growth and risk mitigation.

How do ethical AI initiatives enhance your brand reputation and commercial appeal?

What ethical challenges have you encountered during product commercialization, and how were they resolved?

How do you address customer concerns about AI bias to minimize reputational risks?

Are there market segments where ethical AI considerations play a larger role in purchasing decisions?

4. Corporate Governance Considerations

Examine how governance frameworks can reduce risks and enhance market positioning.

What governance frameworks and approaches can companies adopt to capture the benefits of AI while mitigating its risks?

Have your AI governance practices helped you succeed in highly regulated markets?

5. Human-Centered AI Design for Commercial Success

Explore how human-centric design enhances market appeal and reduces barriers to adoption.

How do you incorporate consumer feedback into the design to maximize market fit for your AI products?

How do you measure the commercial impact of making AI systems more empathetic and user-friendly?

What role does cultural adaptability play in designing AI for global markets?

Experts Featured in This Report

James Manyika, Senior Vice President for Research, Technology and Society at Google

Dev Stahlkopf, Chief Legal Officer and Executive Vice President at Cisco

John Thomas, Vice President and Distinguished Engineer at IBM Technology Expert Labs

Nino Tasca, Chief Product Officer, Northstar Travel Group and former Director, Google Product Management - Speech

Candice Greenberg, former Global Brand Lead at Spotify

Jennifer Kosar, AI Assurance Leader, PwC

Adam Cheyer, VP, AI Experience at Airbnb

Parag Kulkarni, Chief Digital Officer at IKEA

Chris Bajda, Managing Partner of Groomsday

Disclaimer

This report is for general informational purposes only. While efforts have been made to ensure accuracy, we make no guarantees regarding the completeness, reliability, or suitability of the information provided. Actual outcomes may vary significantly due to factors beyond our control, and we disclaim all liability for any loss or damage resulting from the use of or reliance on this report.